Deep Learning / Web Scraping / Google Cloud Platform

A Bot that Bird Watches so You Don’t Have To

Live Nest Cam Monitoring with a Virtual Machine

If you love birdwatching and live in the 21st century, you’ve likely experienced eagerly navigating to an online live nest camera only to find an empty nest. Wondering when the bird will return? In this article, I will describe my final project for the 12-week Metis Data Science Bootcamp, which I attended January–March 2021. The project aimed to automate nest monitoring for the Nottingham Trent University Falcon Cam. Using deep learning and automation tools, I designed a method for 24-hour bird detection that serves as infrastructure on which a Twitter bot or other notification system can be built to notify users when the bird enters or leaves the nest.

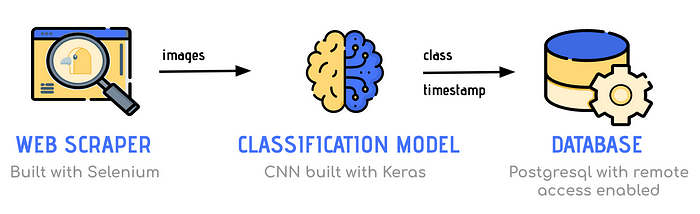

The overall goal for this project was to set up a virtual machine (VM) on Google Cloud Platform and run a script containing the following components:

- A web scraper that can go into the Nottinghamshire Wildlife Trust website and pull screenshots of the site’s live video

- A deep learning model to classify whether or not there is an adult bird in the screenshot

- A script for writing predicted classes and timestamps to an SQL database
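To show how these three components fit together, here is a minimal sketch of the monitoring loop. It is an illustration rather than the script that runs on the VM: `monitor`, its three callables, and the 60-second interval are all hypothetical names and values chosen for the example.

```python
import time
from datetime import datetime, timezone


def monitor(capture, classify, record, interval_seconds=60.0, max_iterations=None):
    """Repeatedly capture a frame, classify it, and record the result.

    capture()            -> path of a freshly saved screenshot
    classify(image_path) -> predicted class, e.g. "bird" or "no bird"
    record(ts, label)    -> persists the prediction (e.g. to a database)

    All three callables are placeholders for the components listed above.
    """
    done = 0
    while max_iterations is None or done < max_iterations:
        image_path = capture()
        label = classify(image_path)
        record(datetime.now(timezone.utc), label)
        done += 1
        # Sleep between iterations, but not after the final one.
        if max_iterations is None or done < max_iterations:
            time.sleep(interval_seconds)
    return done
```

Passing the components in as functions keeps the loop independent of Selenium, Keras, and the database driver, so each piece can be swapped or tested on its own.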

Setting Up the Virtual Machine

The virtual machine used to run this project is hosted by Google Cloud Platform. It’s an “e2-small” machine with a Debian 10 boot disk. I configured the machine for web scraping by installing Chrome, ChromeDriver, and Selenium via the command line.

Once the machine was configured, I was ready to upload the files necessary to begin scraping and classifying the status of the nest.

Creating the Web Scraper

The web scraper was written with Selenium. It is programmed to enter the website hosting the livestream, scroll to the appropriate video, start it, then take a screenshot. Below is a demo of the web scraper in action.
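In code, the steps described above (open the page, scroll to the video, start it, capture a frame) could be sketched roughly as follows. This is not the project's actual scraper: the CSS selector, the wait time, and the Chrome flags are assumptions, and `make_headless_chrome` and `capture_frame` are hypothetical helper names.

```python
import time


def make_headless_chrome():
    """Create a headless Chrome driver suitable for a VM without a display."""
    # Imported lazily so capture_frame can be exercised with a stub driver.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument("--headless")
    options.add_argument("--no-sandbox")
    options.add_argument("--window-size=1280,720")
    return webdriver.Chrome(options=options)


def capture_frame(driver, page_url, video_selector, out_path, wait_seconds=5.0):
    """Open the page, scroll to the video, start it, and save a screenshot.

    video_selector is a CSS selector for the player element; the right value
    depends on the page markup and is an assumption here.
    """
    driver.get(page_url)
    # "css selector" is the value of selenium's By.CSS_SELECTOR.
    video = driver.find_element("css selector", video_selector)
    driver.execute_script(
        "arguments[0].scrollIntoView({block: 'center'});", video
    )
    video.click()              # start playback
    time.sleep(wait_seconds)   # give the stream time to render a frame
    video.screenshot(out_path)  # screenshot of the player element only
    return out_path
```

A fixed sleep is the simplest way to wait for the stream; an explicit wait on the player state would be more robust if the page exposes one.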

Assembling the Dataset

My aim was to gather about 1500 images of this nest where the positive (bird) and negative (no bird) classes would be roughly balanced.

I was able to gather frames from the nest cam from two sources: the Notts Falconcam YouTube channel, which regularly posts 24-hour time lapses of the nest, and images scraped directly from the live stream using the method described above. Once the images were collected, I labeled each image by eye as having an adult bird in the frame or not.

Finally, I partitioned the images into train and test datasets, making sure that any run of sequential images was confined entirely to either the train or the test set to reduce data leakage between them.
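One way to enforce that constraint is to split by sequence rather than by individual image. The sketch below assumes each image carries a sequence identifier (for example, the time lapse or scraping session it came from). The `(path, label, sequence_id)` layout and the `split_by_sequence` name are hypothetical, not the project's actual data format.

```python
import random
from collections import defaultdict


def split_by_sequence(samples, test_fraction=0.2, seed=0):
    """Split (path, label, sequence_id) samples so no sequence spans both sets.

    Whole sequences are assigned to the test set until it holds roughly
    test_fraction of all images; the remaining sequences go to train.
    """
    groups = defaultdict(list)
    for path, label, sequence_id in samples:
        groups[sequence_id].append((path, label))

    sequence_ids = sorted(groups)
    random.Random(seed).shuffle(sequence_ids)  # reproducible shuffle

    total = sum(len(items) for items in groups.values())
    train, test = [], []
    for sequence_id in sequence_ids:
        target = test if len(test) < test_fraction * total else train
        target.extend(groups[sequence_id])
    return train, test
```

Because sequences are assigned whole, the test set size only approximates `test_fraction`, and class balance within each split still needs to be checked separately.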

Image Preprocessing

Before the images can be fed into the deep learning model for training and testing, they must first go through some preprocessing steps.

To do this, I used Keras’ image preprocessing module, which allowed me to resize the images to the target size of 224 x 224 and convert them to NumPy arrays. Because I used the pre-trained MobileNetV2 as a feature extractor in my deep learning model (more on this in the next section), I chose to use Keras’ MobileNetV2 input preprocessing function to scale the pixel values.
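A minimal sketch of these steps, assuming a recent TensorFlow 2.x release where the image helpers are available as `keras.utils.load_img` and `keras.utils.img_to_array` (older releases expose the same functions under `keras.preprocessing.image`). The function names are hypothetical. `scale_like_mobilenet_v2` is only a plain-Python illustration of what MobileNetV2's `preprocess_input` does to each pixel value.

```python
TARGET_SIZE = (224, 224)  # (height, width) expected by the model


def scale_like_mobilenet_v2(pixel_value):
    """Reference for the scaling: maps the range [0, 255] to [-1, 1]."""
    return pixel_value / 127.5 - 1.0


def load_and_preprocess(image_path):
    """Load one image, resize it, and scale it for MobileNetV2."""
    # Imported lazily because importing TensorFlow is slow.
    from tensorflow import keras

    image = keras.utils.load_img(image_path, target_size=TARGET_SIZE)
    pixels = keras.utils.img_to_array(image)  # float array, shape (224, 224, 3)
    return keras.applications.mobilenet_v2.preprocess_input(pixels)
```

Using the network's own preprocessing function matters because the pre-trained weights expect inputs in the same range they were trained on.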

The Deep Learning Model

The bird/no bird classification model was built using Keras and contains a pre-trained feature extractor, hidden layers, and a two-unit output layer.

Using MobileNetV2 as a feature extractor reduced the computation time needed to train the model and also helped compensate for the fact that my training dataset was relatively small. With the MobileNetV2 feature extractor in place, only a few additional dense and dropout layers were required to train a model with sufficient accuracy.

In code:
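What follows is a minimal sketch of an architecture matching that description, not the exact model from the project. It assumes MobileNetV2 is loaded through `keras.applications` with frozen ImageNet weights. The hidden layer sizes, dropout rate, optimizer, and loss are illustrative assumptions, as is the class encoding (0 = no bird, 1 = bird).

```python
INPUT_SHAPE = (224, 224, 3)
NUM_CLASSES = 2  # assumed encoding: 0 = no bird, 1 = bird


def build_model(hidden_units=(128, 64), dropout_rate=0.3):
    """Frozen MobileNetV2 feature extractor plus a small dense classifier."""
    # Imported lazily because importing TensorFlow is slow.
    from tensorflow import keras

    base = keras.applications.MobileNetV2(
        input_shape=INPUT_SHAPE,
        include_top=False,   # drop the ImageNet classification head
        weights="imagenet",
        pooling="avg",       # global average pooling -> one feature vector
    )
    base.trainable = False   # only the new layers are trained

    inputs = keras.Input(shape=INPUT_SHAPE)
    x = base(inputs, training=False)
    for units in hidden_units:
        x = keras.layers.Dense(units, activation="relu")(x)
        x = keras.layers.Dropout(dropout_rate)(x)
    outputs = keras.layers.Dense(NUM_CLASSES, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # integer class labels
        metrics=["accuracy"],
    )
    return model
```

Freezing the base network means only the small dense head is trained, which is what keeps training fast and workable on a dataset of roughly 1,500 images.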

This model performed quite well, with an 86% true positive rate and a 99% true negative rate, meaning that the model excels at identifying an empty nest but sometimes misses the bird when it is in the nest. This false negative classification is more likely to occur when the bird is sitting farther away from the camera than usual, as seen in the image below.
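For reference, the two rates quoted above are computed from the test set predictions as TP / (TP + FN) and TN / (TN + FP). The sketch below shows the calculation on a small made-up example. The labels are illustrative only and are not the project's data, and the encoding (1 = bird, 0 = no bird) is an assumption.

```python
def true_positive_and_negative_rates(y_true, y_pred):
    """Return (TPR, TNR) for binary labels where 1 = bird and 0 = no bird."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tpr = tp / (tp + fn)  # share of bird images correctly flagged
    tnr = tn / (tn + fp)  # share of empty-nest images correctly flagged
    return tpr, tnr


# Toy example: one of four bird images is missed, no empty nest is misread.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0]
print(true_positive_and_negative_rates(y_true, y_pred))  # (0.75, 1.0)
```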

Setting Up a SQL Database in the VM with Remote Access

With the help of this resource from Google Cloud Community, I set up a PostgreSQL database that is accessible on my local machine through pgAdmin. With the script running on my VM, it is now possible for me to query the database locally to view every classification with its corresponding timestamp.
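Writing a prediction to the database could look roughly like the sketch below. It assumes the `psycopg2` driver, which the project may or may not have used. The table name, column names, and connection parameters are all hypothetical. The `placeholder` argument exists only so the same function can be tried against other DB-API drivers that use a different parameter style.

```python
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS nest_status (
    observed_at TIMESTAMPTZ NOT NULL,
    predicted_class TEXT NOT NULL
)
"""


def connect_postgres():
    """Open a connection; every parameter here is a placeholder value."""
    import psycopg2  # lazy import: only needed when talking to PostgreSQL

    return psycopg2.connect(
        host="localhost", dbname="nestcam", user="postgres", password="change-me"
    )


def ensure_table(conn):
    """Create the table on first run."""
    cur = conn.cursor()
    cur.execute(CREATE_TABLE_SQL)
    conn.commit()
    cur.close()


def record_prediction(conn, observed_at, predicted_class, placeholder="%s"):
    """Insert one (timestamp, class) row using a parameterized query."""
    sql = (
        "INSERT INTO nest_status (observed_at, predicted_class) "
        f"VALUES ({placeholder}, {placeholder})"
    )
    cur = conn.cursor()
    cur.execute(sql, (observed_at, predicted_class))
    conn.commit()
    cur.close()
```

With rows in this shape, a query such as `SELECT * FROM nest_status ORDER BY observed_at DESC LIMIT 10;` in pgAdmin would show the most recent classifications.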

Now what?

With all of these components up and running, I’m now able to continue collecting data on this nest 24 hours a day. As for future work, I’m waiting for my Twitter Developer account to be approved so that I can build a Twitter bot that will tweet every time it sees the bird enter or leave the nest.
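Such a bot would need to turn the stream of per-frame classifications into "entered" and "left" events. The sketch below shows one possible approach, not something already built. Because the model occasionally misses the bird, it only accepts a state change after several consecutive agreeing predictions. The `detect_events` name and the `min_consecutive` threshold are assumptions.

```python
def detect_events(observations, min_consecutive=2):
    """Turn (timestamp, bird_present) pairs into enter/leave events.

    A change of state is confirmed only after min_consecutive identical
    predictions in a row, which filters out isolated misclassifications.
    """
    state = None      # last confirmed state (True = bird in nest)
    candidate = None  # state currently being confirmed
    streak = 0        # consecutive observations agreeing with candidate
    events = []
    for timestamp, bird_present in observations:
        if bird_present == candidate:
            streak += 1
        else:
            candidate, streak = bird_present, 1
        if streak >= min_consecutive and candidate != state:
            if state is not None:  # the first confirmed state is not an event
                events.append((timestamp, "entered" if candidate else "left"))
            state = candidate
    return events
```

For example, a single "bird" prediction surrounded by "no bird" frames is ignored, while two "bird" predictions in a row produce an "entered" event at the second one.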